Artificial intelligence (AI) is rapidly bringing new levels of automation and insight to clinical studies, enabling smarter trial designs and deeper data analysis. At the same time, AI creates new security, privacy, and compliance risks that may be slowing its adoption.

Good clinical practice (GCP) principles can coexist with AI’s benefits, provided study teams understand its capabilities and how to use these systems responsibly.

Achieving these goals requires demystifying the technology while reinforcing the human side of AI use: the oversight, ethics, and empathy needed to use it with confidence.

Why AI security in clinical trials matters now

As Predrag Gaic, Viedoc’s Information Security Officer, puts it, “Most people connect AI only with chatbots. They consider AI as just another search engine. For clinical trials, AI can bring much more value in everyday working processes and contribute to faster and better execution of clinical trials.”

A paper on AI in clinical trials published in the Journal of the Society for Clinical Data Management (JSCDM), co-written by Viedoc’s Senior Regulatory Advisor, Alan Yeomans, outlines several significant AI use cases, including optimizing:

- Trial design

- Patient recruitment and site selection

- Remote patient monitoring

- Patient engagement and protocol adherence

- Data analysis against published articles, reports, and metrics

Predrag says the benefits are clear but carry significant risks. “The more AI is used in clinical trials, the greater the risk that personally identifiable or other sensitive clinical trial data could be compromised, corrupted or exploited.” Minimizing the risks, he explains, starts with truly understanding how the technology works.

Common misconceptions and concerns

The apprehension about using AI in clinical research often stems from misunderstandings about how it functions, along with legitimate concerns about privacy, security, and compliance. For example:

- Myth: “AI’s output can be taken at face value.” While using AI can help control costs and accelerate workflows, Predrag warns that AI is not always 100 percent correct. He stresses that humans should always validate the system’s results.

- Myth: “Using anonymized data eliminates privacy risks.” In truth, advanced AI models can link anonymized data back to its source, potentially re-identifying the patient. The myth also rests on the assumption that AI tools sit outside normal governance rules, but, as Predrag explains, “When it comes to information classification and acceptable usage of data, the rules for AI should be the same as for any other IT system.”

- Myth: “Customer resistance can be overcome once the system is in use.” Even well-intended, well-thought-out features can be rejected if trust is lacking. Transparency and explainability are essential to building trust.

As the JSCDM article points out, complex AI models can appear to be “black boxes” that magically generate answers. AI tool vendors must make their systems auditable so that users can trust their reliability and take advantage of their benefits.

Best practices and workflow accelerators

Fortunately, most, if not all, of the issues surrounding the use of AI can be addressed through governance, training, and compliance:

- Regulations such as the EU Artificial Intelligence Act, along with international standards such as ISO/IEC 42001, provide frameworks for responsible AI development and use. Regulators are also working with industry leaders to define practical rules through efforts such as the European Medicines Agency’s (EMA’s) GCP Inspectors Working Group.

- Industry-wide resources, such as JSCDM, support compliant AI deployment by giving practitioners a hub where they can learn about AI's real-world implications and how to manage these systems effectively.

- Organizations can and should establish clear guidelines for AI use. Predrag suggests leaders consider what is acceptable: “Is it third-party tools? Chatbots and assistants? Or do you plan to include AI as part of a product offering? Making these decisions early is an important starting point.”

- External stakeholders should also have a say. As Predrag says, “It’s important to understand what is acceptable for them and to identify any red flags early on. It’s also essential to share your plans and understand their expectations around product features and compliance.”

- Enterprise licensing can address many user concerns. Predrag says that because the vendor has already gone through the vetting and onboarding process, the risks of these AI tools can be reduced dramatically.

Even with guardrails in place, data protections must be continually enforced, Predrag notes, adding, “It’s important to be proactive but also to keep a close eye on what is happening in the organization. The employee is the key element in responsible and secure AI usage.” In other words, it’s up to internal users to pay attention to what data is being entered or shared, and to verify the output of the AI system.

Achieving this requires change management that covers not only the technical side of integrating AI but also the human aspects. Training, upskilling, and reinforcement can help users be more effective, and leaders should actively listen to concerns and answer questions transparently to provide confidence in the system.

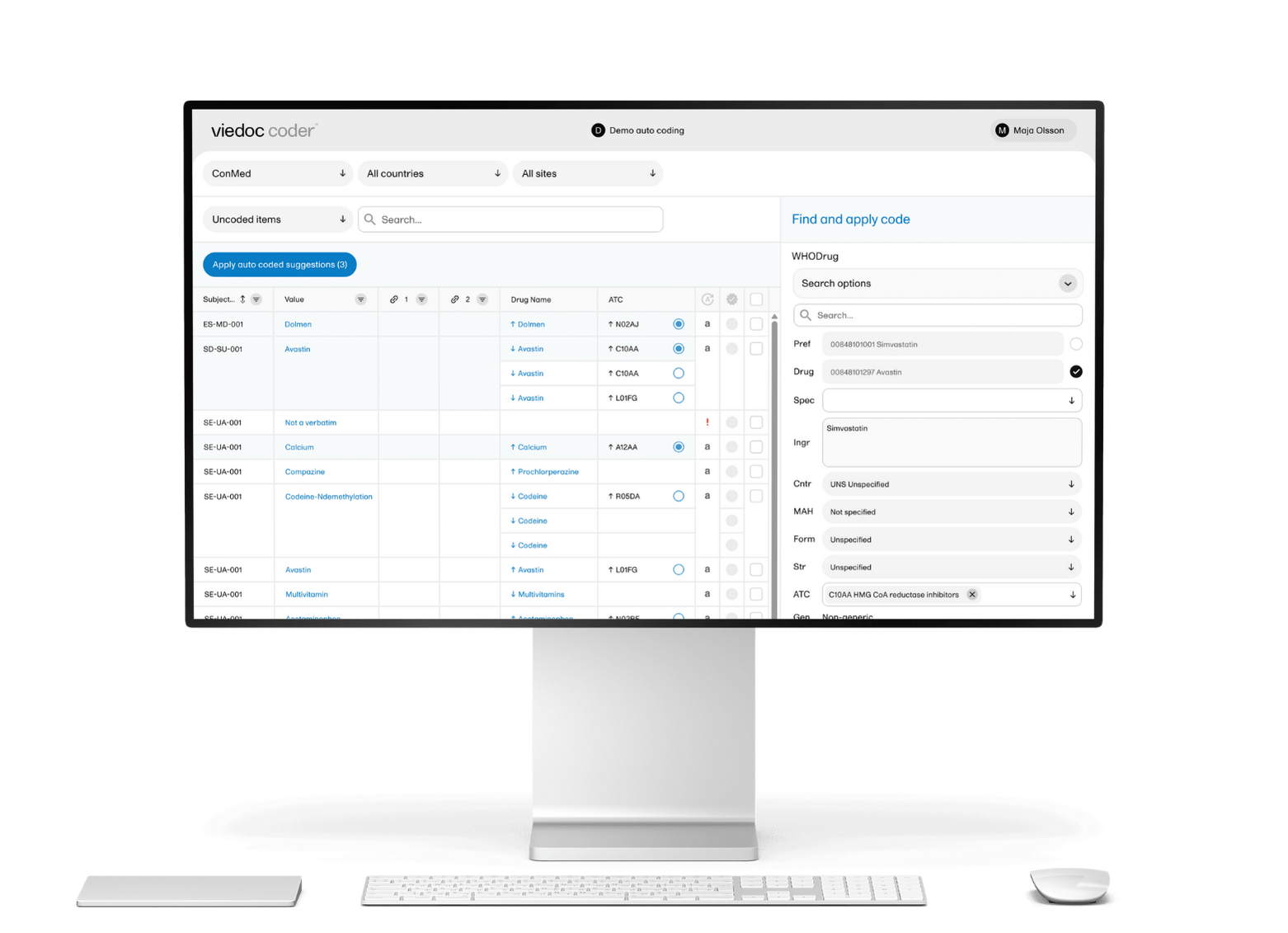

Viedoc’s approach: how the industry supports responsible AI use

AI vendors have an opportunity and an obligation to drive AI ethics forward. Study teams should look for solutions that deliver:

- Clear data governance policies, along with visibility into how the tools collect, store, and share information

- Enterprise licensing and private environments, to ensure proprietary data is never used to train public models

- Compliance and auditability, adhering to laws, standards, and frameworks designed to enhance security and transparency

Predrag says privacy and security are paramount at Viedoc. For example, Viedoc prohibits using AI to process sensitive data and ensures clinical data stays inside a secure environment.

Viedoc’s tools, including customer-facing applications such as AI chatbots, include human-in-the-loop oversight. Predrag says Viedoc has scaled back or adapted planned AI features based on customer input, which builds trust and enables adoption of the platform.

Effective AI use isn’t just about the technology. It’s about building trust in the systems and the results they generate. Viedoc’s AI strategy is centered on privacy, security, and compliance, enabling clinical trial teams to focus on improving outcomes.

Building trust in secure and responsible AI for clinical trials

As AI becomes more integrated into clinical trials and research, the stakes for data security and compliance have never been higher. To see how Viedoc approaches security and compliance with practices rooted in industry standards and regulatory requirements, explore our security and compliance principles.
