My desk was covered with paper CRFs, binders and checklists in Excel. I had only one database for clinical values and very rarely used meta data – if at all. External data was typically entered into CRFs from lab lists, and most often only basic data was captured. In complex studies, we often had 3-5 binders of CRFs per patient.

Naturally, these methods were error prone, slow, costly, and labour intensive. The period from patient visit to data entry was often 3-12 months. I remember constantly chasing monitors to chase site staff to fill in paper CRF pages, and queries took a long time to resolve. Handwriting was notoriously difficult to decipher, and you often had to ‘interpret’ what was written.

A data manager at this time was responsible for building the database, often with the help of a “self-taught tech guy”, and there was little standardisation of processes, methods, variables or tools. The size of the data management team varied with the size of the project, and when a database lock loomed, teams could expand rapidly with new, less experienced team members from other projects. The data manager was responsible for the overall management of data entry, staff and teams, and double data entry of all CRF pages was standard. Even in relatively small studies, quite a few people could be assigned to a project. The largest study I ever ran was a 15,000-patient cardiovascular study that peaked with approximately 15 data management staff in the last 6 months before the database was locked.

Though we had relatively simple tools, we discovered a case of fraud at one stage. We’d come across a site with made-up patients. It was an eye opener for me that fraud could exist in science, even when the ultimate objective was to save patients’ lives.

The data management teams typically consisted of a lead data manager who managed one or more data entry groups and could come from a variety of disciplines. Some data managers were nurses, some were statisticians, and some had computer science degrees.

During this period, data managers often came into the process late. They ‘only’ had to take care of the data. They were rarely, if ever, involved in the creation of the protocol or the CRF. The regulatory requirements were minimal until the introduction of GCP in 1997, when uniform requirements suddenly became more standard, especially in the larger clinical study regions of that time, such as the USA, Europe, and Japan.

At this stage, the CRFs were still basic, not too complex. The internet was young, and each browser had its unique quirks. Internet connections were slow and computer literacy was very low, making it difficult at best to run studies. We often had to train site staff in basic computer skills. Modems were inefficient, and when a connection was interrupted, you had to restart the entire connection process. Most of the data entry systems being used were still mimicking the paper process, instead of making use of the new and improved features and processes the electronic systems made possible. The process remained clunky and time-consuming, and staff on sites working with data entry by computer did not appreciate the advantages it offered, since other sites still largely used a paper-based process. More often than not, the browsers didn’t work with the EDC systems in place, and the operating systems needed updating.

During this period, the first ePROs appeared. You had to download the data separately, either onto your computer desktop for a transfer of the data to the data manager, or you had to upload it directly into the EDC system. Imaging became more popular and hard disk drives were sent by courier from the sites to the central reader. If your trial had ECGs to be read, the process was manual, and relatively simple tools were used to measure the different waves and record the data in the EDC system.

New systems began to emerge, like the Clinical Trial Management System (CTMS), which provided more control of the operational aspects of monitoring and project management. There was no link, however, between the CRF databases and the CTMS, so much extra work was required to control and synchronise the databases. If you needed to run a trial similar to the paper trial referred to above, the new tools and processes required a much smaller data management team. Instead of some 10-15 data managers, a few data managers and data entry staff were often adequate.

When the first external randomisation systems appeared, you needed a phone to obtain a randomisation number and the study drug assignment. The site and enrolment numbers, as well as other study-related factors, were entered manually by phone.

With time, the use of electronic tools increased. Trials became more complex and data management got engaged in projects earlier. Data managers had to get databases and processes ready before the start of projects, and the addition of electronic tools often caused delays. The effect was that projects often weren’t ready by the initially estimated go-live date. Training of the sites was essential, and much time at Investigator Meetings was devoted to training teams in the new processes and tools.

In 1997, the new 21 CFR Part 11 regulation was released with requirements on, among other things, audit trails and electronic signatures. At the time, very few systems, if any, were able to meet these new requirements. The FDA later had to relax its enforcement of parts of the regulation, as the industry simply wasn’t ready. These days, of course, things like electronic signatures and audit trails are standard in any computerised system – not only in the clinical trials sector.

Despite the arrival of Clinical Trial Management Systems and the availability of Trial Master File systems, there wasn’t a huge uptake by the industry. They were very expensive systems and only large organisations were able to invest in them.

From the early 2000s to approximately six years before the COVID-19 pandemic, systems evolved rapidly, and trials became more user friendly. Integrated exchange of data from one system to the next came into play. Data exchange happened more and more often in real time. You no longer had to wait until a study ended to get your hands on the data you needed. Clinical trials were growing increasingly complex, and the simple, straightforward study designs of the early days became less common. Complex study designs, such as adaptive, umbrella and basket designs, were introduced.

Because of improved technologies, standardisation of both technologies and data formats, and a much better internet, you could now make more sense of data in reporting tools like Risk Based Monitoring. Clinical Trial Management Systems (CTMS) started being integrated with EDC systems. And finally, electronic Trial Master Files (eTMF) entered the scene and became the central repository for all documents defining and managing the study, including applications to authorities and documentation generated by the study, such as study reports and monitoring evidence.

‘Wearables’ was the buzzword in the mid 2010s, and we started exploring the value they could bring to clinical trials and asking if they could connect us even better with study databases. Once again, the data management role began to change, and data scientists found themselves more and more involved in the clinical trial process.

As different technologies in clinical trials provided improved integration and connected us better, a new era for data managers was born. Not only would they get more involved in clinical trials and process the trial data, they would also work more with the meta data around the clinical trial.

The growing amount of technology eventually meant that data managers were the only staff qualified to take ownership of data control. This was not ideal, of course, as it took away the focus from the key task: the process of capture, management of code, checking and presenting the data, derived or calculated, and capturing meta data from the clinical trial.

More technology tends to bring more challenges, and now there was more to do before the trials were even started. While trials had been ‘back heavy’ previously, now they were ‘front heavy’. More was needed pre-trial to be able to start a study. Data managers were no longer providing a service or simply taking care of paper CRFs when they came in. Data management was now an essential part of the planning of the clinical trial. Deciding which technology was relevant, the processes that would support it, and who needed which data in what format and when, was now an important function of the role.

Timely communication was also essential for the additional technologies and adapted processes required by each trial.

We could all see where things were heading with the ever-evolving methodologies of clinical trials, but no one thought it would happen as quickly as it did, if at all.

When COVID-19 struck, there was an immediate impact on clinical trials. According to the WCG Knowledgebase, in July 2020, the number of sites open for enrolment was down 89% and new study starts were down by over 50%. The pandemic affected therapeutic areas differently, but the only major area with an increase in number of studies was Infectious Diseases. All other major disease areas were down. Significantly. The Oracle report on the COVID-19 experience presented the leading causes of the delays in clinical trials: longer enrolment times (49%), protocol amendments (45%, as the studies needed to adjust to the new situation) and paused studies (41%).

Remote monitoring, televisits, electronic consent and Direct to Patient drug deliveries had gained both attention and traction among some of the more forward-looking organisations prior to the pandemic. However, most organisations (both on the sponsor and vendor side) were not looking to fix something that wasn’t broken. The processes were well accepted even though they were labour intensive, time consuming and costly.

When visiting sites suddenly became impossible, we had to rely on remote technologies. The patients couldn’t or didn’t want to visit the sites, and suddenly, that which had been perceived as a risky new way of managing clinical trials was the obvious solution.

Changing processes during an ongoing trial is difficult. Many trials were either cancelled or greatly delayed. In some cases, however, the study design and objectives were adapted to the new reality.

COVID-19 has shown us that we can change rapidly when we need to. Among the reasons we will not turn back to our former ways: we cannot travel to the sites as much as we used to, and patients do not want to, or cannot, travel to sites (at least not as much as they used to). We cannot forget environmental aspects either. These have already had a huge effect on the way we can and want to travel.

Whenever possible, we should adapt the processes to the patients’ and the sites’ needs. Doing things remotely makes trials much more efficient for all of us. Televisits are accepted by us all, and though we are Zoom-fatigued, we must ask ourselves if we really want to go back to travelling as much as we once did. Certain procedures can only be done on site, but others can be managed from home or partly managed from primary care centres closer to our homes.

The challenges are abundant, of course. Technology changes quickly, and sometimes it’s hard to commit to a technology that we might only be able to use for a specific trial.

Nowadays study designs are often complex, and, in many cases, processes are designed around a specific project rather than a standardised or generic process. How do we validate this? What can be reused? Are patients ready to have devices at home – or to bring them with them when they travel? Obviously, Direct to Patient (DTP) drug delivery has positive qualities, but the burden is suddenly on the patient to keep track of their own medication records, use diagnostic tests and manage data capture, all of which was previously done by healthcare professionals. How do we ensure compliance? How do we make certain procedures are carried out in the proper way? I am certain this works well at times and many steps are already carried out in an acceptable way, but can we really tell what has been done properly and what has not?

There are also changes to business models. Historically, CROs have got a large portion of their revenues from clinical operations, particularly in monitoring. The data manager role is changing into a data scientist role with much more responsibility. Keeping track of data, meta data, adding more advanced methods like artificial intelligence (AI) and machine learning (ML) processes, and timely communication with a much broader team in the projects to run clinical trials more efficiently and to maintain quality, is the new reality.

Clinical trials are employing more data sources, with most data now coming from external or non-EDC data sources. Data integration and reconciliation, as well as the growing complexity of how we review data, have all contributed to more challenging trial timelines, such as study start-up timelines. I believe we need to completely rethink and streamline data flows, processes and study design to better manage external data providers and sources. The process is currently very front heavy, and even though the tools are often flexible enough to accommodate different processes and integrations with different sources, the protocol must be approved by the authorities and the IRB. Even minor changes to a study protocol can take a long time before they’re implemented.

Standardisation in data formats, study modules and therapeutic guidelines has massively helped the industry make studies easier, but with the overall burden of much more data, it seems we are back at square one. According to research done at Tufts University, studies have more than tripled their number of data points and more than doubled the number of procedures carried out on the patients. Not to mention the fact that the low-hanging fruit in terms of diseases has already been studied, and the more complex conditions are what remain. Or that you now need more endpoints and/or more complex endpoints to prove that your new drug is safer and/or more efficacious than what’s already on the market. Although decentralised clinical trials are very promising and interesting, I think we are still stuck in the same place. Clinical trials are complex to carry out, cost a lot of money and take significant time to conduct. Going back to the old way, however, is not an option. We still have lots more to do.

What are some of the new technologies being used to streamline the flow of data? How are clinical data platforms providing data access and review capabilities to key stakeholders?

We have already mentioned many of the new technologies and processes and how they came about. I’d like to emphasise some of the more important ones:

eConsent

A far more flexible and patient-friendly way to inform study participants, so that they better understand the disease they have and what procedures are going to be carried out. The process of getting reconsent if a study is changed is also much more flexible. Text, images and film can all be used to help a participant, and quizzes can be used to verify that they have understood.

TeleVisits

Both the participant and the sites benefit from TeleVisits, as patients do not have to visit the study sites as often. The participant and sites can use their own or provisioned devices to communicate with each other, and if devices are used to follow up compliance and capture data from procedures and questionnaires, everything can be followed up in a convenient way for the participant. The elimination of non-essential travel makes it much more efficient. Using TeleVisit technologies is highly appreciated and gives all, sites and participants alike, greater flexibility, while allowing more participants to enrol in and remain involved in clinical trials.

Direct To Patient (DTP)

DTP is when study medication/devices are delivered directly to the participant’s home or office. Instead of the participant having to travel to the site, the treatment is delivered directly to them. It’s essential, of course, that the participant can store the treatment properly according to instructions and that the treatment is delivered on time for correct administration. You can also combine DTP with ambulatory nurse visits to check that the procedures and study drug administration are done properly. DTP increases the requirements on the logistical procedures significantly, as they’re often designed to deliver drugs to depots and study sites, so the fact that DTP adds a whole new level of complexity should be considered.

Study sample management

This approach allows participants to visit their usual laboratory or primary care centre to have blood drawn, which is then shipped to the central laboratory for analysis. With time-sensitive samples and/or samples that need preparation, multiple factors will need to be considered. Like DTP, this approach increases the requirements on the logistical procedures.

Connected devices

There’s beauty in providing participants with devices that measure compliance, progress of disease and their treatment. There are many factors to keep in mind, however, including device malfunction, battery status and charging status, different technical standards in different regions and cultural differences (e.g., date formats, languages, electrical outlet variations and more). Each of these factors can create challenges, but device use that is carried out correctly can help a study a lot by providing timely, high-quality data that was previously impossible to acquire. Devices can give us all a much better understanding of the diseases we study, as well as their natural progression.

Improved reporting

Modern tools allow us much better insight into the way a study is progressing, both in terms of data and meta data. The meta data allows us to run the study more efficiently and proactively.

Bring Your Own Device (BYOD)

The penetration of smartphones is a global phenomenon. Even in developing countries, modern devices like smartphones are readily available. Participants can do more on their own using their own devices. This adds a lot of flexibility to clinical trials, but also presents new problems to consider, such as connectivity, standardisation of measurements, bandwidth, and generalisability of results. Many in the clinical trials industry are reluctant to use BYOD. However, it is likely this will need to change as participants increasingly feel inconvenienced by having to use many different devices.

All these practices and methods – from televisits and decentralised trials to risk-based monitoring and remote monitoring – were a part of the ‘big shift’ that started five to ten years ago and gained significant traction in the past two years. It will take much time and more resources, however, to fully embrace, embed and scale these approaches into clinical trials.

In the long run, study participants will want, and may even require, much more control of their own data, how it is used and shared.

And as high data quality grows in importance, you must ask: what will attract the modern data manager? How will you make your organisation more attractive? This is an important topic, of course, and I intend to address it in a later article. Meanwhile, one of my dear colleagues, Alan Yeomans, co-authored a similar article along with other industry experts. You can read it here.

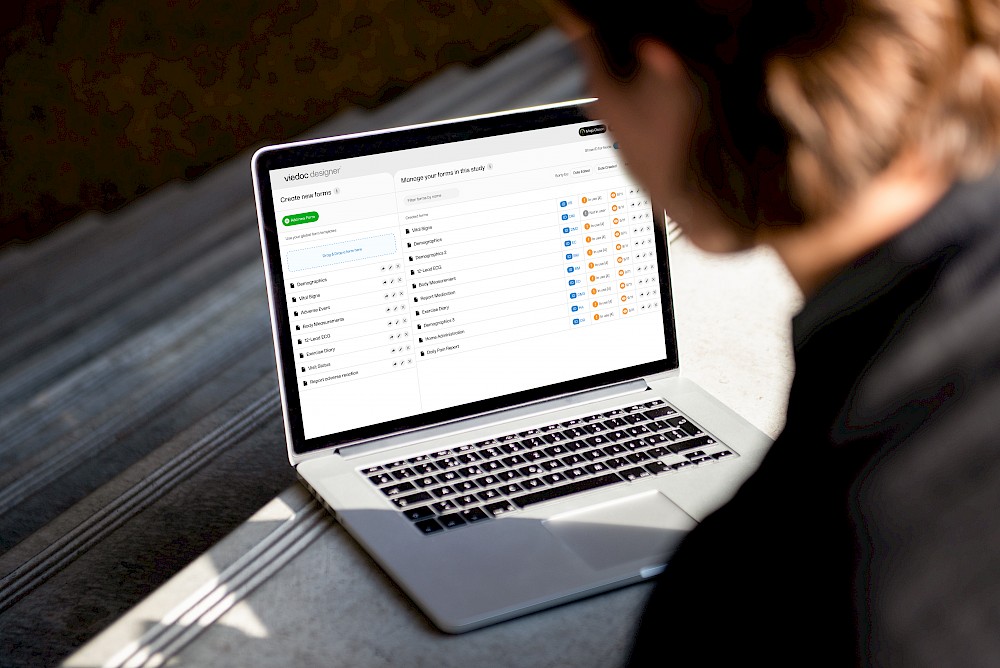

"Data capturing has been made easy because of viedoc, it is incredibly robust and easy to navigate"